Provisioning Consul with Puppet

Coming up: a couple of posts about incorporating Consul for service discovery and Spring Boot for microservices in an ecosystem that isn’t quite ready for Docker. In this post, I’ll cover provisioning (and wiring up) a Consul cluster and a set of Spring Boot applications with Puppet. In the next post, I’ll show you how to start using some of the goodness in Spring Boot Actuator and Codahale Metrics so you can stop caring so much about the TLC that went into building up any particular VM.

Sometimes I hate that I work for a company with a bunch of products in production, or that we have a ton of new ideas in the pipeline. It would be preferable if we could start from scratch with what we know now, or if we could spend the next decade on a complete rewrite.

Alas, we do have applications running on stodgy, old, long-lived VMs that customers rely on, along with grand ideas for how to solve other problems these customers have.

Without dropping terms like “hybrid cloud” (oops), I’m going to outline one approach for introducing some cloud flavor into an ecosystem with roots in long-lived, thick VMs. Without going “Full Docker,” we can still start reducing our dependence on the more static way of doing things by introducing service discovery and targeted/granular/encapsulated services (sorry, I was trying to avoid the term “Microservice”).

Since I’d rather show you an example than espouse a bunch of ideas (despite the diatribe you’ve been reading or ignoring thus far), from here on out I’ll limit my discussion to using Puppet to build out a stack of Spring Boot microservices that use Consul for service discovery.

Get your feet wet

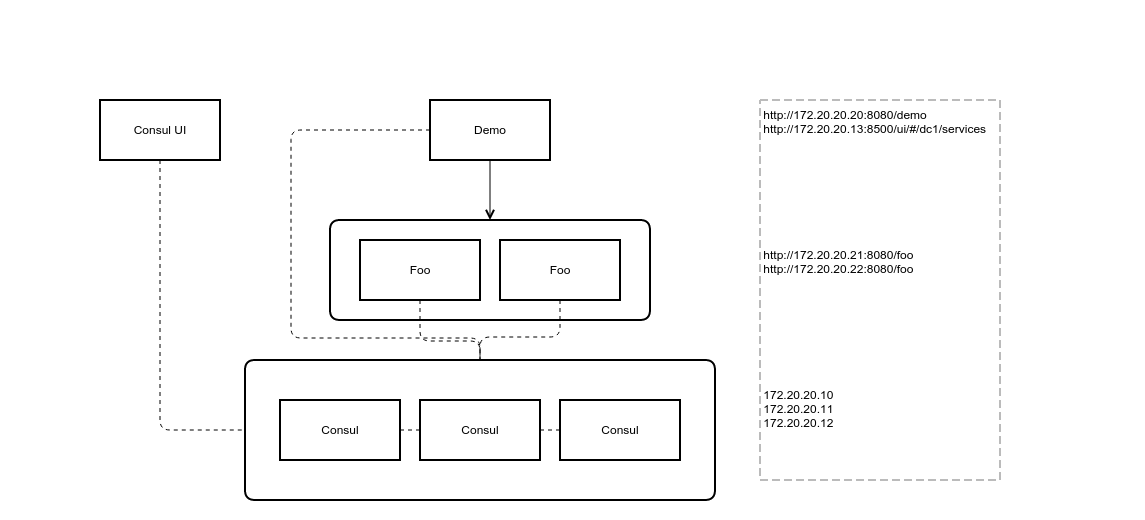

Before we get started: this whole example is available on GitHub as a Vagrant stack. Just follow the instructions in the README (or keep reading) and you’ll have a production-ish set of VirtualBox VMs doing their thing: three Consul server nodes clustered up, a UI to track your Consul cluster state, a Spring Boot demo application, and a pair of Spring Boot back-end services (named “foo”) for the demo app to discover and use.

Get your stack running:

```shell
git clone https://github.com/benschw/consul-cluster-puppet.git
cd consul-cluster-puppet
./deps.sh   # clone some puppet modules we'll be using
./build.sh  # build the Spring Boot demo application we'll need
vagrant up
```

Try out the endpoints:

P.S. The IPs are specified in the Vagrantfile, so these links will take you to your stack.

P.P.S. If you want to play around with Consul some more, take a look at this post, a similar example that focuses more on what Consul provides.

Getting your Puppet on

Our nodes differ from one another in only a few ways, and each of those differences can be expressed as a property rather than written into our Puppet code. We will leverage existing Puppet modules to do the heavy lifting for us and factor any node- or app-specific configuration out into Hiera configs.

Modules

All that we have to do is compose other people’s work into a stack.

- solarkennedy/puppet-consul - provisions our Consul cluster and the Consul client agents registering/servicing our applications.

- rlex/puppet-dnsmasq - routes Consul DNS lookups to the local Consul agent (i.e., if our app looks up a name ending in “.consul”, the query is forwarded to localhost:8600, where the Consul agent answers DNS).

Hiera

In a Hiera config, we can specify an address by which to reach the Consul server cluster and a name to label each application booting into our stack.

(You have to find Consul somehow, whether you’re a server seeking to join the cluster or a client trying to register or find a service. In the real world we would treat these cases differently and incorporate a load balancer or at least a reverse proxy, but let’s just call this our single point of failure and move on. So we put 172.20.20.10 in common.yaml as the Consul server node everyone joins.

Ok, saying “single point of failure” makes me too uncomfortable and I can’t just move on. Instead, imagine me muttering something about a hostname and then blurting out “Exercise for the reader!”)

In addition to common.yaml, each node has a config that specifies a “svc_name” identifying the service we are provisioning on it. There is one “demo” instance and two “foo” instances. The consul::service define picks up this name and uses it to identify the application when it registers with Consul.
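To make the Hiera side concrete, here is a minimal sketch of how a role manifest might read those two values. It assumes common.yaml defines `join_addr` and each node’s own yaml file defines `svc_name`; both key names appear in this post. The variable names and the `notify` resource are illustrative assumptions, and the real app.pp may be organized differently.

```puppet
# Hypothetical excerpt in the spirit of app.pp; not the repo's actual file.
# hiera() looks each key up through the configured hierarchy.
$join_addr    = hiera('join_addr')  # shared by every node (172.20.20.10, from common.yaml)
$service_name = hiera('svc_name')   # set per node: "demo" or "foo"

# Echo the resolved values in the Puppet run output, which helps when debugging a node.
notify { "svc_name=${service_name} join_addr=${join_addr}": }
```

Because only the yaml differs between the two “foo” nodes and the “demo” node, the manifest itself stays identical across all of them.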

Thus…

Consul Server

```puppet
class { 'consul':
  # join_cluster => '172.20.20.10', # (provided by hiera)
  config_hash => {
    'datacenter'       => 'dc1',
    'data_dir'         => '/opt/consul',
    'log_level'        => 'INFO',
    'node_name'        => $::hostname,
    'bind_addr'        => $::ipaddress_eth1,
    'bootstrap_expect' => 3,   # wait for three servers before electing a leader
    'server'           => true,
  },
}
```

Consul Client

```puppet
class { 'consul':
  config_hash => {
    'datacenter' => 'dc1',
    'data_dir'   => '/opt/consul',
    'log_level'  => 'INFO',
    'node_name'  => $::hostname,
    'bind_addr'  => $::ipaddress_eth1,
    'server'     => false,
    'start_join' => [hiera('join_addr')], # (...hiera)
  },
}
```

Consul Service Definition

```puppet
consul::service { $service_name: # (indirectly provided by hiera... look at app.pp)
  tags           => ['actuator'],
  port           => 8080,
  check_script   => $health_path,
  check_interval => '5s',
}
```
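The post doesn’t show what `$health_path` contains. Consul runs a `check_script` as a shell command and reads its exit code: 0 means passing, 1 means warning, and anything else means critical. One plausible value is sketched below. It assumes, as the `actuator` tag suggests, that the Spring Boot Actuator health endpoint is served at `/health` on the app’s port 8080. That is the Spring Boot 1.x path; Boot 2 moved it to `/actuator/health`. The curl-based command is likewise an assumption, not the repo’s code.

```puppet
# Hypothetical value for $health_path; the real app.pp may build it differently.
# Assign it before the consul::service declaration that uses it.
# -s suppresses progress output. -f makes curl exit 22 on an HTTP error status,
# such as the 503 Actuator returns when the app reports DOWN. A refused connection
# exits 7. Consul marks any exit code other than 0 or 1 as critical.
$health_path = 'curl -sf http://localhost:8080/health'
```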

Dnsmasq

```puppet
include dnsmasq

# Forward any *.consul query to the local Consul agent's DNS port.
dnsmasq::dnsserver { 'forward-zone-consul':
  domain => 'consul',
  ip     => '127.0.0.1#8600',
}
```
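With that forwarding in place, Consul’s DNS interface answers `<service>.service.consul` with the addresses of that service’s instances. By default it leaves out any instance whose health check is critical, so an app can refer to its dependencies by name. The sketch below shows one hypothetical way to hand the demo app its “foo” address. The file path and property name are invented for illustration. The port comes from the `port => 8080` in the service definition.

```puppet
# Hypothetical: the path and property name are illustrative, not from the repo.
# The name resolves through dnsmasq -> 127.0.0.1:8600 (the local Consul agent),
# which returns only foo instances that are not failing their health checks.
file { '/etc/demo/application.properties':
  ensure  => file,
  content => "foo.url=http://foo.service.consul:8080\n",
}
```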

Etc.

There’s more to it than that (installing the demo jar and its init scripts for our example, for instance), but it’s all pretty straightforward. Take a look at the code.

The three files app.pp, server.pp, and webui.pp represent the three roles our nodes fulfill. The Consul server agents are all “servers,” the Consul web UI is a “webui,” and all of our Spring apps (both the demo and the foo services) are “apps.”

Since our goal is to make both the redundant copies (duplicate instances of “foo” and “server”) and the details for discovering their addresses (the distinctions between “demo” and “foo” nodes) generic, it makes sense that there are no differences in how we provision them.
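This stack applies one role manifest per Vagrant VM. If you later move to a Puppet master, node definitions could assign the same three roles instead. That is a swapped-in technique, not what this repo does. The hypothetical site.pp below assumes the role manifests have been wrapped in classes named `role::server`, `role::webui`, and `role::app`, and that hostnames follow the patterns shown. None of these names come from the repo.

```puppet
# Hypothetical site.pp: the class names and hostname patterns are assumptions.
# Every redundant copy of a role matches the same definition, so adding another
# "foo" node means adding a hostname and its Hiera yaml, not new Puppet code.
node /^consul\d+$/     { include role::server }
node /webui/           { include role::webui }
node /^(demo|foo)\d*$/ { include role::app }
```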

Moving On

Those VMs have served you well; they’ve been with you in sickness and in health… but also vice versa. At this point, most people agree that little is gained by anthropomorphizing your servers. But most people also still have enough invested in them that it’s not obvious how to move on.

Introducing service discovery and microservices to your stack is one approach. Services like Consul work well as a decoupled system that augments an existing stack. You can layer it on easily and start relying on it for new applications without investing time or money in retrofitting your old software.

In my next post I’ll go over how the Spring Boot application framework fits into this brave new world. Don’t let the Java name-drop fool you, however; it’s still an infrastructure post. I’ll be covering health checks and metrics, or “How to know when stuff’s fracked and how to do something about it.”
